Finding more mobile-friendly search results

Webmaster level: all

When it comes to search on mobile devices, users should get the most relevant and timely results, whether the information lives on mobile-friendly web pages or in apps. As more people use mobile devices to access the internet, our algorithms have to adapt to these usage patterns. In the past, we’ve made updates to ensure a site is configured properly and viewable on modern devices. We’ve made it easier for users to find mobile-friendly web pages, and we’ve introduced App Indexing to surface useful content from apps. Today, we’re announcing two important changes to help users discover more mobile-friendly content:

1. More mobile-friendly websites in search results

Starting April 21, we will be expanding our use of mobile-friendliness as a ranking signal. This change will affect mobile searches in all languages worldwide and will have a significant impact on our search results. Consequently, users will find it easier to get relevant, high-quality search results that are optimized for their devices.

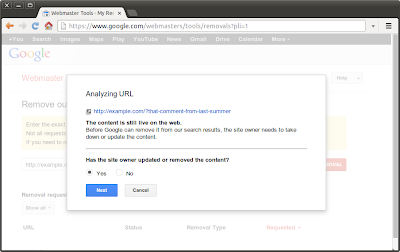

To get help with making a mobile-friendly site, check out our guide to mobile-friendly sites. If you’re a webmaster, you can get ready for this change by using the following tools to see how Googlebot views your pages:

- If you want to test a few pages, you can use the Mobile-Friendly Test.

- If you want to check a whole site, you can use your Webmaster Tools account to get a full list of mobile usability issues across your site using the Mobile Usability Report.
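As a rough local pre-check before running the tools above, the sketch below looks for one commonly recommended ingredient of a mobile-friendly page: a `<meta name="viewport">` tag that sets `width=device-width`. This is only an illustrative heuristic, not how the Mobile-Friendly Test or our ranking signal works; the names `ViewportFinder` and `has_responsive_viewport` are hypothetical and exist only for this example.

```python
from html.parser import HTMLParser


class ViewportFinder(HTMLParser):
    """Records the content attribute of a <meta name="viewport"> tag, if any."""

    def __init__(self):
        super().__init__()
        self.viewport_content = None

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attributes = dict(attrs)
        # Attribute values can be None when written without a value.
        if (attributes.get("name") or "").lower() == "viewport":
            self.viewport_content = attributes.get("content") or ""


def has_responsive_viewport(html: str) -> bool:
    """Heuristic only: True if the page declares width=device-width."""
    finder = ViewportFinder()
    finder.feed(html)
    content = finder.viewport_content
    if content is None:
        return False
    # Ignore spacing and case differences inside the content value.
    return "width=device-width" in content.replace(" ", "").lower()


if __name__ == "__main__":
    sample = (
        '<html><head><meta name="viewport" '
        'content="width=device-width, initial-scale=1"></head></html>'
    )
    print(has_responsive_viewport(sample))
```

A page that passes this check can still have other mobile usability issues, such as small text or tap targets placed too close together, so the tools listed above remain the place to confirm how a page is actually evaluated.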

2. More relevant app content in search results

Starting today, we will begin to use information from indexed apps as a factor in ranking for signed-in users who have the app installed. As a result, we may now surface content from indexed apps more prominently in search. To find out how to implement App Indexing, which allows us to surface this information in search results, have a look at our step-by-step guide on the developer site.

If you have questions about either mobile-friendly websites or App Indexing, we’re always happy to chat in our Webmaster Help Forum.
